Tencent Open-Sources HY-Motion 1.0: A Billion-Parameter Text-to-Motion AI Model

Tencent has released HY-Motion 1.0, a new open-source text-to-motion generation model with over one billion parameters, marking a major step forward for AI-driven animation and 3D content creation.

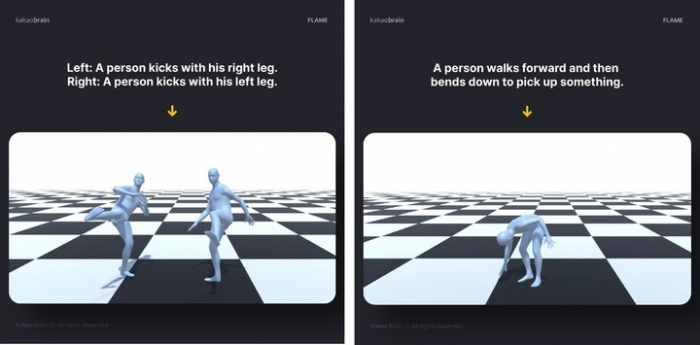

HY-Motion 1.0 allows users to generate high-quality 3D skeletal motion directly from natural-language prompts such as “a person plays the piano” or “a character performs a jazz dance.” The resulting motion data can be integrated into common 3D animation workflows, including game engines and animation software.
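The article does not say which file format HY-Motion exports. To illustrate what skeletal motion data looks like once it reaches an animation tool, the sketch below assumes a clip has already been converted to per-frame root positions plus per-joint Euler angles. It writes that clip as BVH, a plain-text motion-capture format that Blender and many other tools can import. The `Joint` class, `to_bvh`, `count_joints`, and the three-joint skeleton are hypothetical and are not part of HY-Motion's code.

```python
from dataclasses import dataclass, field


@dataclass
class Joint:
    """Hypothetical skeleton joint: name, offset from its parent, child joints."""
    name: str
    offset: tuple[float, float, float]
    children: list["Joint"] = field(default_factory=list)


def count_joints(joint: Joint) -> int:
    """Number of joints in the subtree rooted at `joint`."""
    return 1 + sum(count_joints(c) for c in joint.children)


def _write_joint(joint: Joint, lines: list[str], depth: int, is_root: bool) -> None:
    """Append the BVH HIERARCHY entry for `joint` and its descendants (depth-first)."""
    pad = "  " * depth
    lines.append(f"{pad}{'ROOT' if is_root else 'JOINT'} {joint.name}")
    lines.append(f"{pad}{{")
    ox, oy, oz = joint.offset
    lines.append(f"{pad}  OFFSET {ox:.4f} {oy:.4f} {oz:.4f}")
    if is_root:
        # The root carries global translation as well as rotation.
        lines.append(f"{pad}  CHANNELS 6 Xposition Yposition Zposition Zrotation Xrotation Yrotation")
    else:
        lines.append(f"{pad}  CHANNELS 3 Zrotation Xrotation Yrotation")
    for child in joint.children:
        _write_joint(child, lines, depth + 1, False)
    if not joint.children:
        # BVH closes each leaf chain with an End Site (zero offset in this sketch).
        lines.append(f"{pad}  End Site")
        lines.append(f"{pad}  {{")
        lines.append(f"{pad}    OFFSET 0.0000 0.0000 0.0000")
        lines.append(f"{pad}  }}")
    lines.append(f"{pad}}}")


def to_bvh(root: Joint, frames: list[list[float]], fps: float = 30.0) -> str:
    """Serialize a skeleton and its per-frame channel values as BVH text.

    Assumed frame layout: root X/Y/Z position, then Z/X/Y Euler angles in
    degrees for every joint, in the same depth-first order as the hierarchy.
    """
    expected = 3 + 3 * count_joints(root)
    lines = ["HIERARCHY"]
    _write_joint(root, lines, 0, True)
    lines += ["MOTION", f"Frames: {len(frames)}", f"Frame Time: {1.0 / fps:.6f}"]
    for i, frame in enumerate(frames):
        if len(frame) != expected:
            raise ValueError(f"frame {i} has {len(frame)} values, expected {expected}")
        lines.append(" ".join(f"{v:.4f}" for v in frame))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    skeleton = Joint("Hips", (0.0, 90.0, 0.0), [
        Joint("Spine", (0.0, 10.0, 0.0), [Joint("Head", (0.0, 50.0, 0.0))]),
    ])
    # Two toy frames: the root rises 1 unit while the spine tilts 10 degrees about X.
    frames = [
        [0, 90, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
        [0, 91, 0, 0, 0, 0, 0, 10, 0, 0, 0, 0],
    ]
    print(to_bvh(skeleton, frames))
```

A real exporter would also need to match the target tool's axis conventions and rotation order. The fixed Z/X/Y order here is just one common BVH choice.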

What Is HY-Motion 1.0?

HY-Motion 1.0 is a large-scale generative AI model designed specifically for human motion synthesis. Unlike traditional animation pipelines that rely heavily on manual keyframing or motion-capture sessions, HY-Motion converts text descriptions into realistic motion automatically.

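The model pairs a Diffusion Transformer (DiT) architecture with flow matching, a training method in which the network learns a velocity field that gradually transforms random noise into motion data. According to Tencent, this combination produces smoother, more natural movement and closer alignment with the text instructions.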
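Flow matching is a general technique, and the article does not describe HY-Motion's exact formulation. The sketch below assumes the common straight-line (rectified-flow) path between Gaussian noise `x0` and data `x1`. During training, a network learns to predict the velocity `x1 - x0` at a random point on that path. At generation time, a solver (here, plain Euler steps) integrates the learned velocity from noise to a motion sample. All function names are hypothetical. A toy `oracle` velocity field replaces a trained DiT so that the example stays runnable.

```python
import numpy as np


def interpolate(x0, x1, t):
    """Straight-line path x_t = (1 - t) * x0 + t * x1 from noise x0 to data x1."""
    return (1.0 - t) * x0 + t * x1


def _time_shape(shape):
    """Shape that broadcasts one time value per batch element."""
    return (shape[0],) + (1,) * (len(shape) - 1)


def flow_matching_loss(velocity_fn, x1, cond, rng):
    """One Monte Carlo estimate of the flow-matching regression loss.

    velocity_fn(x_t, t, cond) stands in for the network; per the article,
    HY-Motion uses a DiT for this role, conditioned on the text prompt.
    """
    x0 = rng.standard_normal(x1.shape)
    t = rng.uniform(size=_time_shape(x1.shape))
    x_t = interpolate(x0, x1, t)
    target = x1 - x0  # velocity of the straight-line path, constant in t
    pred = velocity_fn(x_t, t, cond)
    return float(np.mean((pred - target) ** 2))


def sample(velocity_fn, shape, cond, rng, steps=50):
    """Generate by Euler-integrating dx/dt = v(x, t, cond) from t=0 (noise) to t=1."""
    x = rng.standard_normal(shape)
    dt = 1.0 / steps
    for i in range(steps):
        t = np.full(_time_shape(shape), i * dt)
        x = x + dt * velocity_fn(x, t, cond)
    return x


def oracle(x, t, cond):
    """Toy exact velocity toward one known clip (passed as `cond`).

    A trained network would have to learn this field from data instead.
    """
    return (cond - x) / (1.0 - t)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy "motion" batch: 2 clips x 8 frames x 6 pose features.
    clip = rng.standard_normal((2, 8, 6))
    print(flow_matching_loss(oracle, clip, clip, rng))  # close to 0
    print(np.allclose(sample(oracle, clip.shape, clip, rng), clip))  # True
```

At any point on the straight path, the oracle's velocity equals `x1 - x0`. As a result, its training loss is essentially zero and Euler sampling lands on the target clip. A real DiT only approximates this field from data.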

Key Features and Capabilities

Billion-Parameter Scale

At launch, Tencent described HY-Motion 1.0 as the largest open-source text-to-motion model, surpassing earlier community models in parameter count and, according to its own evaluations, in motion fidelity.

Broad Motion Coverage

The model supports 200+ motion types across multiple categories, including:

- Daily activities

- Sports and fitness

- Dance and performance

- Social interactions

- Locomotion and gestures

High-Quality Training Pipeline

Tencent trained HY-Motion through a multi-stage process that includes:

- Large-scale pretraining on extensive motion datasets

- Fine-tuning with curated, high-quality animations

- Human-feedback-based optimization to improve realism and instruction following
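The article does not say how Tencent used human feedback. One widely used ingredient in such pipelines is a reward model trained on pairs of outputs in which a person marked one as better. The Bradley-Terry loss sketched below pushes the preferred sample's score above the rejected one's. This is a generic sketch, not HY-Motion's training code: the function names are hypothetical, and the reward scores stand in for a model that would rate generated motions.

```python
import math


def preference_loss(reward_preferred: float, reward_rejected: float) -> float:
    """Bradley-Terry negative log-likelihood that the human-preferred sample wins.

    Equals -log(sigmoid(r_pref - r_rej)), written to avoid overflow for large margins.
    """
    margin = reward_preferred - reward_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # For negative margins, factor out exp(-margin) so exp() only sees a non-positive argument.
    return -margin + math.log1p(math.exp(margin))


def batch_preference_loss(pairs: list[tuple[float, float]]) -> float:
    """Average loss over (preferred, rejected) reward pairs scored by a reward model."""
    return sum(preference_loss(p, r) for p, r in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical reward-model scores for three human-labelled pairs of generated motions.
    pairs = [(2.0, 0.5), (0.1, 0.3), (1.0, 1.0)]
    print(batch_preference_loss(pairs))
    print(preference_loss(0.0, 0.0))  # log(2) ≈ 0.6931
```

Once trained this way, a reward model can score new generations and steer fine-tuning toward motions that people rate as more realistic or closer to the prompt.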

Open-Source and Developer-Friendly

Tencent has made HY-Motion 1.0 fully open-source, releasing both the model weights and code. Developers can run it locally, experiment with different prompts, or integrate it into existing pipelines for:

- Game development

- Virtual characters and avatars

- Film and animation previsualization

- Research and academic projects

Community discussions also note that the model is available in more than one size, including a lighter variant that reduces hardware requirements while reportedly maintaining good quality.

Why This Matters

High-quality character animation has long depended on proprietary tools and expensive motion-capture setups. By releasing HY-Motion 1.0 openly, Tencent lowers the barrier to entry, giving indie developers, researchers, and creators access to advanced motion generation that was previously out of reach.

As generative AI continues to expand beyond text and images, models like HY-Motion signal a future where animation, games, and virtual worlds can be built faster and more creatively than ever before.